SPDIF digital signals are almost universally decoded nowadays by a class of digital input receiver known as an Asynchronous Sample Rate Converter (ASRC). These are most often integrated circuit chips, such as the Crystal Semiconductor CS 8420 (that particular chip is widely used but has a well-known bug, which is worked around in higher quality equipment).

These make sense inside DACs as the best possible way of handling jitter (both source jitter and carrier jitter) from the digital source clock and from SPDIF itself.

Assume you have a DAC with a near perfect clock, but what you are receiving is an SPDIF input signal that contains jitter. Even if the digital source had an absolutely perfect clock, the SPDIF signal from it would still contain what I am calling carrier jitter, because the actual clock transitions embedded in the signal are affected by the signal itself. The ever-varying data microscopically shifts the "zero crossing" points, where the transitions occur, by enough to produce just under 200 ps of jitter in the recovered clock. This is the price paid for not having a separate signal for the clock itself, which would not be contaminated by the data. But note that all serial interfaces in the computer realm have this problem, and parallel interfaces, which did not, have virtually disappeared, because it's much cheaper to make serial interfaces that are good enough.
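To make the carrier-jitter mechanism concrete, here is a toy model in Python. It assumes biphase-mark line coding (the scheme SPDIF uses to embed the clock in the data) and stands in for cable and receiver bandwidth with a crude one-pole low-pass filter. The values `samples_per_half=16` and `alpha=0.15` are hypothetical, picked only for illustration, not measurements of any real interface. The sketch measures how late each zero crossing arrives after its ideal transition and shows that the delay depends on which bits came just before.

```python
def biphase_mark(bits, start_level=1):
    """Encode bits as biphase-mark half-cells (two levels per bit).

    The level flips at the start of every bit cell (the embedded clock);
    a 1 adds a second flip in the middle of the cell.
    """
    level = start_level
    halves = []
    for bit in bits:
        level = -level            # transition at every cell boundary
        halves.append(level)
        if bit:
            level = -level        # extra mid-cell transition for a 1
        halves.append(level)
    return halves


def crossing_delays(bits, samples_per_half=16, alpha=0.15, start_level=1):
    """Delay (in simulation samples) from each ideal transition to the zero
    crossing seen after a one-pole low-pass 'cable' (hypothetical model)."""
    halves = biphase_mark(bits, start_level)
    wave = [h for h in halves for _ in range(samples_per_half)]

    # Ideal transition instants: every index where the level changes.
    ideal, prev = [], start_level
    for j, h in enumerate(halves):
        if h != prev:
            ideal.append(j * samples_per_half)
        prev = h

    # Filter the waveform and locate zero crossings by linear interpolation.
    y, crossings = float(start_level), []
    for n, x in enumerate(wave):
        y_next = y + alpha * (x - y)
        if y * y_next < 0:
            crossings.append(n - 1 + y / (y - y_next))
        y = y_next
    return [c - t for c, t in zip(crossings, ideal)]


pattern = [0, 0, 1, 1, 0, 1, 0, 0, 0, 1, 1, 1, 0, 1, 0, 0] * 4
delays = crossing_delays(pattern)
print(f"spread of crossing delays: {max(delays) - min(delays):.2f} samples")
```

With a steady all-zeros pattern the delay settles to a constant; with mixed data, a transition that follows a short half-cell arrives earlier than one that follows a long one, because the filtered signal had less time to settle. That data-dependent timing shift is the kind of effect described above as carrier jitter.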

If all you have is 200 ps of jitter, that is so benign you might as well leave it be. But source clocks themselves are never perfect, so there will be additional jitter from them, and any mismatch in the speed of the source and receiver clocks will cause samples to be gained or lost, and that is not good.
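The arithmetic behind gained or lost samples is simple: a mismatch of a few parts per million (ppm) between two clocks accumulates into whole samples surprisingly quickly. A small Python sketch, assuming a constant mismatch and no buffer or rate converter to absorb it; the 50 ppm figure is only an illustrative value, not a measurement of any device mentioned here.

```python
def slips_per_second(sample_rate_hz, mismatch_ppm):
    """Whole samples gained or lost per second when source and receiver
    clocks differ by mismatch_ppm and nothing absorbs the drift."""
    return sample_rate_hz * abs(mismatch_ppm) * 1e-6


def seconds_between_slips(sample_rate_hz, mismatch_ppm):
    return 1.0 / slips_per_second(sample_rate_hz, mismatch_ppm)


def mismatch_ppm_from_slip_interval(sample_rate_hz, seconds):
    """Inverse: the clock mismatch implied by one slip every `seconds`."""
    return 1e6 / (sample_rate_hz * seconds)


# Two clocks an illustrative 50 ppm apart at 48 kHz:
print(slips_per_second(48_000, 50), "samples per second")
print(round(seconds_between_slips(48_000, 50), 3), "seconds between slips")
```

Run the other way, the roughly one-sample-per-minute slip at 48kHz reported below for the DA-3000 corresponds to a mismatch of only about 0.35 ppm (`mismatch_ppm_from_slip_interval(48_000, 60)`).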

Much has been made of the jitter issue in the subjectivist audiophile press, despite the lack of evidence that jitter in decent equipment is audible in double blind testing, and much reason (including a major AES investigative report) to believe it would not be. Detailed graphs of jitter-induced distortion sidebands down to -160dB are often shown, and people obsess over sideband peaks that sometimes reach -110dB. Meanwhile, the same people may brush aside THD+N as high as -50dB (lovers of SETs, for example) or -10dB aliases just above 20kHz (lovers of NOS, for example) that can and do intermodulate downwards, causing massive modulated noise. If the noise process you are concerned about only causes noise peaks at -110dB, you should not be much worried about it.

Strangely, one of the best sounding DACs to me, the Denon DVD-9000 (which uses dual differential Burr Brown 1704s with an "AL24" digital filter including HDCD digital operators), has a lot of hashy looking sidebands above 10kHz, starting just below -110dB and mostly just below -120dB. Most likely those neither contribute to nor detract from the good sound. And even those are likely caused not by the synchronous (I believe) digital interface but by the BB 1704s (the best R2R chip ever made, but not as low noise as the best Sigma Delta chips that arrived not long after) and the fancy Denon-proprietary digital filter.

Does R2R have a fundamental advantage? First, it should be said that the 1704s were quite good, and for some time better than the mainstream Sigma Delta chips in ordinary SINAD measurements. Second, the exact dynamic performance benefits of R2R chips, if any, may need a different kind of analysis than frequency spectrum analysis to become apparent. I've drawn a blank on that myself, and all the converters I use on a daily basis are very low noise and distortion Sigma Delta DACs, which do sound good to me.

(But I'm keeping the DVD-9000s, and maybe more, just for future tests, and currently for HDCD decoding as well. There are two reasons I'm not rolling DACs anymore. First, I've been very happy with the Emotiva Stealth DC-1: sonically it is very pure sounding, and it has a convenient size, price, and adjustability. Second, my system needed 3 identical DACs until last year. Since adding the miniDSPs, which convert everything to 48kHz or 96kHz for the supertweeters, the time delay now stays fixed at different INPUT sampling rates, so I can more easily roll DACs again. But I don't think it's as rewarding as speaker testing and adjusting.)

The landmark study published by the AES concluded that jitter would have to exceed 10,000 ps to be audible.
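A common back-of-envelope way to relate jitter to sideband levels: for sinusoidal jitter of peak amplitude J applied to a sine of frequency f, the phase modulation index is β = 2πfJ, and each first-order sideband sits at roughly β/2 = πfJ relative to the tone. The Python sketch below assumes that idealized sinusoidal case (real jitter is broadband and data-dependent) and checks the formula numerically by sampling a jittered sine and reading two exact DFT bins.

```python
import cmath
import math


def sideband_dbc(signal_hz, jitter_peak_s):
    """Small-angle estimate of each first-order jitter sideband, in dB
    relative to the tone: 20*log10(pi * f * J)."""
    return 20 * math.log10(math.pi * signal_hz * jitter_peak_s)


def measured_sideband_dbc(signal_hz, jitter_hz, jitter_peak_s,
                          fs=48_000, n=4_800):
    """Sample a sine whose sampling instants wobble sinusoidally, then compare
    the DFT bins at the tone and at tone + jitter_hz.

    Both frequencies must be multiples of fs / n (10 Hz here) so they land
    exactly on DFT bins and no window is needed.
    """
    samples = []
    for i in range(n):
        t = i / fs
        dt = jitter_peak_s * math.sin(2 * math.pi * jitter_hz * t)
        samples.append(math.sin(2 * math.pi * signal_hz * (t + dt)))

    def bin_magnitude(freq_hz):
        k = round(freq_hz * n / fs)
        return abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                       for i, x in enumerate(samples)))

    return 20 * math.log10(bin_magnitude(signal_hz + jitter_hz)
                           / bin_magnitude(signal_hz))


for jitter_ps in (200, 10_000):
    print(jitter_ps, "ps ->", round(sideband_dbc(20_000, jitter_ps * 1e-12), 1), "dB")
print(round(measured_sideband_dbc(10_000, 1_000, 200e-12), 2),
      round(sideband_dbc(10_000, 200e-12), 2))
```

Under these assumptions, 200 ps of peak jitter on a 20kHz tone puts the sidebands near -98dB, and 10,000 ps puts them near -64dB.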

Anyway, jitter paranoia has guided the design of digital interface receiver chips since the early 1990s. It was concluded around then that the best way to handle incoming jitter was to interpolate between the incoming digital values as they arrive. In this way a precise clock at the receiver gets values from the digital interface, but they are not the original numbers from the source; they are numbers interpolated from the input at the new clock instants. It's like having a little computer examine the input values and make educated guesses about what the values would be at the new clock instants. A fringe benefit of this approach is that it can intrinsically change from one sampling rate to another: you can extract either a higher or a lower sampling rate just by asking the little computer for guessed values more or less frequently. This kind of digital input receiver is therefore known as an ASRC.
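A minimal Python sketch of that "little computer": a windowed-sinc interpolator that evaluates the incoming samples at new clock instants. This is a textbook method chosen for clarity, not how any particular ASRC chip is built (real chips typically use polyphase filters and continuously estimate the rate ratio); the `half_width` of 16 input samples and the fixed, known rate ratio are assumptions.

```python
import math


def sinc(x):
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def interpolate(samples, t, half_width=16):
    """Estimate the band-limited signal at fractional input time t using a
    Hann-windowed sinc kernel spanning +/- half_width input samples."""
    centre = math.floor(t)
    acc = 0.0
    for k in range(centre - half_width + 1, centre + half_width + 1):
        if 0 <= k < len(samples):
            x = t - k
            window = 0.5 * (1 + math.cos(math.pi * x / half_width))
            acc += samples[k] * sinc(x) * window
    return acc


def resample(samples, fs_in, fs_out, half_width=16):
    """Ask for values at the receiver's clock instants. The ratio is assumed
    known and fixed here; a real ASRC must also track the incoming rate."""
    step = fs_in / fs_out            # input samples per output sample
    n_out = int(len(samples) / step)
    return [interpolate(samples, i * step, half_width) for i in range(n_out)]


fs_in, fs_out = 44_100, 48_000
tone = [math.sin(2 * math.pi * 1_000 * n / fs_in) for n in range(2_000)]
out = resample(tone, fs_in, fs_out)
print(len(tone), "input samples ->", len(out), "output samples")
```

Asking for 48kHz output from 44.1kHz input puts most output instants between input samples, so almost every output value is a newly computed number rather than one of the originals. Only when an output instant lands exactly on an input instant does the original value come back.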

This approach provided lower distortion than the previous approach of using a sloppy Phase Locked Loop (PLL) to "lock on" to the clock of the incoming digital signal, with a small buffer for the digital values. In that approach, the clock of the DAC itself is made to approximate the incoming clock, smoothed somewhat (to remove as much jitter as reasonably possible, including carrier jitter) but following the clock embedded in the signal closely enough that buffer overrun or underrun never occurs. So you have to speed up and slow down the clock of the DAC itself, which is hard to do without adding more distortion.
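A toy version of that smoothing in Python: a first-order digital tracking loop standing in for a real analog PLL. It assumes white Gaussian jitter on the incoming edges, a source running an illustrative 50 ppm fast, and a hypothetical `loop_gain` of 0.01. The point is only to show the trade-off, not to model any particular receiver: a small gain filters out most of the incoming jitter while the local clock still follows the source closely enough that a small buffer never runs dry or overflows.

```python
import random
import statistics


def track_clock(n_edges, period, offset_ppm, jitter_rms_s,
                loop_gain=0.01, seed=1):
    """Toy first-order tracking loop standing in for a PLL.

    The local clock predicts the next edge one nominal period ahead and then
    moves loop_gain of the way toward the observed (jittery) incoming edge.
    Returns timing errors of the incoming and local edges relative to a
    jitter-free source clock, and the input-minus-local phase error.
    """
    rng = random.Random(seed)
    true_period = period * (1 + offset_ppm * 1e-6)   # source runs fast/slow
    t_local = 0.0
    in_err, out_err, phase_err = [], [], []
    for n in range(1, n_edges + 1):
        ideal = n * true_period
        t_in = ideal + rng.gauss(0.0, jitter_rms_s)
        predicted = t_local + period
        t_local = predicted + loop_gain * (t_in - predicted)
        in_err.append(t_in - ideal)
        out_err.append(t_local - ideal)
        phase_err.append(t_in - t_local)
    return in_err, out_err, phase_err


period = 1 / 48_000
in_err, out_err, phase_err = track_clock(20_000, period, offset_ppm=50,
                                         jitter_rms_s=200e-12)
settled = out_err[2_000:]   # skip the initial lock-in transient
print(f"input jitter  {statistics.pstdev(in_err) * 1e12:6.1f} ps rms")
print(f"output jitter {statistics.pstdev(settled) * 1e12:6.1f} ps rms")
print(f"worst phase error {max(abs(e) for e in phase_err[2_000:]) * 1e9:.0f} ns")
```

In this first-order toy, lowering `loop_gain` smooths more but makes the loop slower and increases the steady-state lag caused by the frequency offset (here about 100 ns, far less than one sample period), which is the kind of balance a PLL designer has to strike.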

I was long skeptical of the benefit of ASRC until I measured it myself with my Emotiva Stealth DC-1 DACs, which let you select either the PLL mode (called "Synchronous") or the ASRC mode (called "SRC"). The default is SRC, and when you select Synchronous, distortion rises from 0.0003% to 0.0004%. I wouldn't lose any sleep over this, but it proved to me the effect is real, and that ASRCs are pretty damned good.
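The percentages above translate into the dB figures used elsewhere in this post with a one-line formula. A minimal Python sketch, assuming the percentages are amplitude ratios relative to full scale (the usual convention for THD+N quoted in percent):

```python
import math


def percent_to_db(percent):
    """THD+N given as a percentage of full scale -> dB relative to it."""
    return 20 * math.log10(percent / 100)


def db_to_percent(db):
    return 100 * 10 ** (db / 20)


src, sync = percent_to_db(0.0003), percent_to_db(0.0004)
print(f"SRC {src:.1f} dB, Synchronous {sync:.1f} dB, difference {sync - src:.1f} dB")
# prints: SRC -110.5 dB, Synchronous -108.0 dB, difference 2.5 dB
```

So the difference between the two modes is about 2.5dB, and both readings sit near the -110dB level discussed above for jitter sidebands.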

But this simplistic view does not account for the fact that not all digital audio devices are either sources or DACs. What about digital volume controls, EQ processors, crossovers, dynamic filters, displays, limiters, and storage devices?

When any ASRC is used for the digital inputs of these devices, the values they start from are not the original values. They are Bit Perfect Not.

If you had a long chain of such devices, all set to "flat" or no change, the digital values passed through the system would be changed by each one cumulatively. So each one may add only 0.0003% distortion+noise to the signal, but it keeps on adding up.
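How fast it adds up depends on whether the errors from each stage are correlated. A small Python sketch, assuming each ASRC stage adds the same 0.0003% measured above and that the errors are either fully independent or fully correlated (both are simplifying assumptions, not measurements of any chain):

```python
import math


def chain_thd_n_percent(per_stage_percent, stages, correlated=False):
    """Total added distortion+noise after `stages` identical stages.

    Uncorrelated contributions add in power (growing as sqrt(stages));
    perfectly correlated ones add in amplitude (growing linearly), the
    worst case. Real chains presumably fall somewhere in between.
    """
    if correlated:
        return per_stage_percent * stages
    return per_stage_percent * math.sqrt(stages)


for n in (1, 4, 10):
    total = chain_thd_n_percent(0.0003, n)
    print(n, f"{total:.5f} %", f"{20 * math.log10(total / 100):.1f} dB")
```

Under the independent-error assumption, ten stages raise 0.0003% (about -110dB) to roughly 0.00095% (about -100dB), a 10dB loss; fully correlated errors would cost 20dB.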

And if you are storing a digital signal, there is simply nothing better to do than store the original values. Anything else is second (or third, etc.) best. The original values are the best values, having zero noise+distortion added to them, and no process that transforms those values can achieve or beat that. Meanwhile we don't care if the digital storage process takes slightly more or less time, on the order of nanoseconds or more. It can wait, at zero cost in performance, for each value to come in, whenever it comes in.

My experiments indicate that ASRCs may have other, even more insidious problems. There is a potential for overload from inter-sample-overs. The best-guessed new values between digital sample values can actually be above 0dB in some instances, especially with extremely highly compressed recordings. Inter-sample-overs can be as much as +6dB, which is severe digital clipping. To be sure you always avoid this problem, each digital processor must lower the digital signal going through it by 6dB. This means the dynamic range keeps dropping by 6dB for each digital stage having an ASRC input. (The presence of inter-sample-overs shows that the interpolation method used by ASRCs is not the linear interpolation we learned in high school, but a higher order interpolation method.)
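The classic demonstration of an inter-sample over, as a Python sketch: a tone at one quarter of the sampling rate, phased so that every sample lands exactly at full scale. Evaluating the ideal band-limited reconstruction halfway between two samples shows that the waveform those samples represent peaks about 3dB above 0dBFS. This illustrates the mechanism only; it is not a model of any particular ASRC's filter, and it does not by itself demonstrate the +6dB figure mentioned above.

```python
import math


def reconstruct(samples, t):
    """Ideal (Whittaker-Shannon) reconstruction at fractional sample time t,
    truncated to the samples we have."""
    total = 0.0
    for k, s in enumerate(samples):
        x = t - k
        total += s * (1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x))
    return total


# A quarter-sample-rate tone whose samples all sit exactly at full scale:
# +1, +1, -1, -1, ...  The underlying sine actually peaks between samples.
samples = [1.0 if n % 4 in (0, 1) else -1.0 for n in range(4_000)]
peak = reconstruct(samples, 2_000.5)   # midway between two +1 samples
print(f"true peak {peak:.4f} = {20 * math.log10(peak):+.2f} dBFS")
```

An interpolator that has to output that in-between value into a fixed-point format with a 0dBFS ceiling will clip unless it has headroom, which is why each stage has to lower the level.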

This is little problem in a DAC, where you can have more than the carrier's number of bits operating inside the DAC itself. You can have 32 bits for an incoming 24 bit signal, giving you far more dynamic range than needed. In effect you have headroom above the headroom of the carrier signal.

But it is a huge issue for a chain of SPDIF-connected DSP and storage devices. Each one is going to reduce the potential dynamic range by 6dB because it has to output back into the 24 bit domain. (BTW, the carrier jitter stays roughly the same no matter how many SPDIF interfaces are in the chain. At the end of 10 devices I still measure about 200ps of jitter, the same as at the input, because that's inherent to the SPDIF carrier itself, as described above. Each synchronous interface reduces it internally to near zero with a PLL, then it goes back up to 200ps at each SPDIF output because of the SPDIF carrier. All of which goes to show how overhyped a concern jitter is.)
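The bookkeeping is simple enough to sketch in Python, assuming roughly 6.02dB of range per bit (ignoring the usual +1.76dB sine-wave term and any dither) and a flat 6dB of headroom surrendered at each ASRC stage, as argued above:

```python
import math

DB_PER_BIT = 20 * math.log10(2)      # about 6.02 dB per bit


def word_dynamic_range_db(bits):
    """Ratio of full scale to one LSB for a given word length."""
    return bits * DB_PER_BIT


def remaining_range_db(bits, stages, headroom_db_per_stage=6.0):
    """Range left after `stages` ASRC stages each give up
    headroom_db_per_stage of level before re-quantising to `bits`
    (a simplified model of the argument above)."""
    return word_dynamic_range_db(bits) - stages * headroom_db_per_stage


print(f"24-bit word: {word_dynamic_range_db(24):.1f} dB, "
      f"32-bit word: {word_dynamic_range_db(32):.1f} dB")
for n in (1, 5, 10):
    print(n, "stages ->", round(remaining_range_db(24, n), 1), "dB")
```

By this crude count, ten ASRC stages leave a 24 bit chain with about 84dB of range, whereas a 32 bit internal path inside a DAC starts with roughly 48dB more to spend.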

Fortunately, many of my devices are old school synchronous SPDIF. That especially includes my 2000 vintage Tact digital receiver, which even boasts about locking on to the clock of any of its digital inputs. It's also true of my vast army of Behringer DEQ 2496s, each an extremely flexible DSP and display device, sadly now discontinued. And, especially, my Alesis Masterlink, which records the exact digital values sent to it, thanks to using a synchronous digital interface (CS 8416 I think).

SADLY, most new digital processing and storage devices do NOT have synchronous interfaces for SPDIF. This includes the fairly ubiquitous Marantz PMD-580 (a common replacement for the Alesis Masterlink). The Marantz uses an ASRC to accept whatever digital signal is provided, from 32kHz to over 96kHz, and convert it to whatever rate you choose to record at, maximum 48kHz. There is no way to turn this "feature" off, and that is typical of digital recorders you see nowadays.

The TASCAM DA-3000, their current 2 channel flagship, does allow you to turn the SRC on or off. When I saw that, I knew I had to have this unit to replace my extremely cumbersome Alesis Masterlink and my ASRC-centric Marantz PMD-580 for making digital recordings. (I use my Lavry AD10 as the analog-to-digital converter, which I suspect may still be better than the DA-3000's own, though it is limited to 96kHz, which is fine by me, and then pipe its AES/SPDIF signal to the digital recorder.)

It took considerable time for me to figure out a way of confirming that the DA-3000 makes bit perfect recordings. And then my first measurements, which suggested it did not, were incorrect. Finally I concluded that it does make bit perfect recordings at 48kHz when fed a 48kHz signal from the Marantz PMD-580.

But with one strange caveat: about once every minute, an extra sample is added or subtracted.
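`diff` typically reports such a slip as a single added or deleted line. To count and locate slips programmatically over a long recording, here is a rough Python sketch. It assumes both recordings are already aligned at the start and loaded as lists of (left, right) sample frames; the 8-frame `window` used to confirm that the two recordings are back in step is an arbitrary choice, and the test data below is hypothetical.

```python
def find_slips(a, b, window=8):
    """Walk two aligned lists of (left, right) sample frames.

    When they disagree, check whether skipping one frame in either list
    brings the next `window` frames back into agreement; if so, report a
    slip (an extra frame in that list), otherwise report a plain mismatch.
    """
    events = []
    i = j = 0
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            i += 1
            j += 1
        elif a[i + 1:i + 1 + window] == b[j:j + window]:
            events.append(("extra frame in a", i))
            i += 1
        elif a[i:i + window] == b[j + 1:j + 1 + window]:
            events.append(("extra frame in b", j))
            j += 1
        else:
            events.append(("mismatch", i))
            i += 1
            j += 1
    return events


# Hypothetical test data standing in for two recordings of the same source.
sig = [((n * 37) % 101, (n * 53) % 97) for n in range(1, 3_000)]
dropped = sig[:1_000] + sig[1_001:]          # one frame missing
print(find_slips(sig, dropped))              # [('extra frame in a', 1000)]
```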

I now figure that is because the "Synchronous" option works best when you also use a separate word clock signal. I have ordered a word clock cable to check out this theory. If that is the explanation, I'm good.

Another possibility is that this extra sample being added or subtracted is some kind of watermark. I suspect it's not audible, but I would not be happy about it.

Using the same test procedure, I found the Alesis Masterlink is indeed Bit Perfect, though it might take one sample or so for it to lock on to a new signal. (The DA-3000 takes 7-14 samples to lock on.) The Alesis neither has nor requires a clock input or output. At the 48kHz sampling rate, it just works perfectly without one.

Method

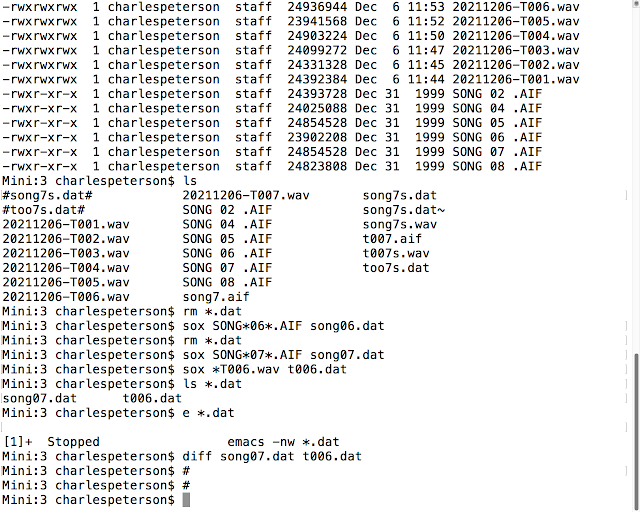

I recorded 30 seconds of music from FM radio on the Marantz PMD-580 at 48kHz. I then played this over and over through 18 feet of Belden coax (2 pieces joined with a gold RCA double barrel; this is the "line" I have always used to play the PMD-580 on my system, though obviously not "ideal") into different digital recorders, also at 48kHz, 24 bits. I transferred the files (either on CD24 or CF) to my Mac. There I used Sox to trim the leading zeros, trim the end to the maximum shared length, and then convert to a .DAT file listing all the samples as numbers in a DOS text file.

Using the text editor Emacs, I edited this file in several ways. Primarily, I removed the column of time numbers, which differ slightly between runs. Just after the "signal" starts in the digital recording, I looked for the first matching line (pairs of values representing the sample value in each channel) in the two files being compared. I edited out the earlier lines, which result from one recording not starting as early or as fast as the other (depending on how fast the recorder locks on to the digital signal). I also edited out mismatching lines after both go back to all zeroes, as that ending segment varies depending on how fast the recording was stopped. I then compared the two files (typically both from the same recorder) using "diff" (a terminal command run in bash) to see any mismatches.
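The manual Emacs steps above can also be scripted. A rough Python sketch, assuming sox's text .dat output (header lines starting with ';', then a time column followed by one column per channel). An 8-frame matching window is used instead of a single line so that a repeated sample pair can't cause a false alignment; that window size is an arbitrary choice, and none of this is the exact procedure used for the results below.

```python
def parse_dat(text):
    """Parse sox .dat text: skip ';' header lines and drop the time column,
    keeping one tuple of channel values per sample frame."""
    frames = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(";"):
            continue
        fields = line.split()
        frames.append(tuple(float(v) for v in fields[1:]))
    return frames


def strip_leading_silence(frames):
    i = 0
    while i < len(frames) and all(v == 0.0 for v in frames[i]):
        i += 1
    return frames[i:]


def find_offset(needle, haystack, window=8):
    """Index in haystack where the first `window` frames of needle occur."""
    probe = needle[:window]
    for j in range(len(haystack) - len(probe) + 1):
        if haystack[j:j + len(probe)] == probe:
            return j
    return None


def mismatched_frames(a, b, window=8):
    """Align two recordings on their first matching run of frames and return
    the indices (after alignment) where they differ."""
    a, b = strip_leading_silence(a), strip_leading_silence(b)
    j = find_offset(a, b, window)
    if j is not None:
        b = b[j:]              # b has extra frames before the common start
    else:
        j = find_offset(b, a, window)
        if j is None:
            raise ValueError("no common starting point found")
        a = a[j:]              # a has extra frames before the common start
    n = min(len(a), len(b))    # ignore whatever runs past the shorter take
    return [k for k in range(n) if a[k] != b[k]]
```

Usage might look like `mismatched_frames(parse_dat(open("take1.dat").read()), parse_dat(open("take2.dat").read()))`, where the file names are hypothetical and each .dat file could come from something like `sox take1.wav take1.dat`. An empty list means the two takes match sample for sample over their shared length.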

I have not yet tried using a word clock cable, but the current results suggest it is necessary for the DA-3000.

Results

For the Masterlink, once an initial sample or so is removed, two separate recordings match perfectly.

For the DA-3000, with SRC turned off, once 7-14 samples are removed from the beginning of one recording or the other (because of slow locking), they match perfectly, except for about 1 sample added or subtracted every minute.

I could not test the Marantz PMD-580 digital recorder, and did not need to, because the SRC cannot be turned off, so it never records the original values, only interpolated values. The specs for its AD converters aren't very good (and they don't sound good to me), but no specs are given for the ASRC, and I think it's fine as far as it goes. I haven't yet figured out which chip it uses, but it is obviously an ASRC chip. I have never noticed bad sound when recording the output of the Lavry AD10 running at 96kHz converted to 48kHz by the Marantz, and the 48kHz recordings are actually smaller and more convenient, so it's wonderful for recording FM radio while using a better ADC (like the Black Lion) in front of it. I'd hope for something better for recording vinyl, and it just bugs me that I cannot, in principle, make bit perfect recordings with this recorder. It will always be subtracting something, measurably but likely imperceptibly, with the ASRC, when that isn't really needed for recording to a flash card. Therefore it never gives a truly honest account of the digital signal; it is always part of the mix. For the purposes of relaxed listening that may be OK, but for the purpose of Audio Investigations it is not acceptable.

Conclusion

The digital interface receiver of the Alesis Masterlink appears to be the best I have tested, providing perfect results even in the less-than-perfect test setup. I sure hope the errors with the DA-3000 (with SRC off) go away when I add a word clock cable; otherwise I'm returning it.

Chips

Digital interface chips using ASRC

CS 8420, widely used but with well known bugs; it must sometimes be restarted, so it requires a design with a microprocessor that can manage that, despite the spec sheet alleging otherwise. It can occasionally go into "garbage mode" or "muffled mode", and when that happens it needs to be restarted. Some have characterized it as "evil." It can be used in one of 9 different modes, including several which are PLL only (no SRC), so you can give the user a choice. But the PLL-only mode may be inferior to that of actual PLL interface chips; it's just a pre-smoother for the ASRC. The chip is supposedly discontinued (though it still seems widely available), but current designs inspired by it are legion.

I see now that my beloved Behringer DEQ 2496 Ultracurve Pro units use this very chip. I will have to investigate whether they use a PLL or an ASRC mode. Somehow it seems they do preserve input sampling rate at the output. The Ultracurve itself was discontinued in 2021. I know because I've been ordering 1 or 2 a year.

AD 1896, an early reference standard following from the pioneering AD 1890.

WM 8805

Nowadays some DAC chips have the digital interface built in, and if so, you can bet it's ASRC. PLLs require more ancillary components, which is why, historically, the receiver was a separate chip. ASRC makes it possible to make everything cheaper.

Digital interface chips using PLL (Synchronous) only

CS 8416, widely used a decade and a half ago and still today; it has 8 digital inputs, often with only 1 of them used.

CS8414, a slightly inferior predecessor to 8416 (though some say the reverse).

CS8412, earlier generation, regarded by some as the best of the 84xx series.

AK 4117, possibly very slightly better than 8416 if you can find it. I'm not sure of the design of later AK interface chips.

https://www.diyaudio.com/forums/digital-source/73446-dac-design-first-step-spdif-receiver-print.html

TI DIR9001, possibly the best PLL chip of all, at least when it was introduced. People were saying it wouldn't be available after 2005, but it appears you can still buy it new online. So it now seems that the designs and redesigns inspired by the widely feared impending demise of this chip were wrongly inspired. HOWEVER, it is limited to 96kHz digital inputs. So I say, what's wrong with that? What high end gear that is not terminal (as a DAC is) should do is use a real PLL chip for the PLL modes, not the crappy PLL of a chip mainly designed as an ASRC. And if the PLL doesn't bother with anything above 96kHz, like the great PLL chips of old (and apparently still now), that's fine by me.

The Alesis Masterlink uses AKM chips, so it seems likely it uses the AK 4117 receiver (or an earlier generation of the same), which is said to be better than any in the Crystal Semiconductor CS841x series. I wonder if the Tact 2.0 RCS uses the TI DIR9001, a high performance chip made by an American company, like the Tact itself. I have never directly compared the two, but generally they both work well. I've only rarely used the digital inputs on the Masterlink (unlike the PMD 580, it has decent sounding built-in analog to digital converters, which I've used instead), or even used it at all (I don't make recordings very often), whereas for 20 years I've endlessly used the Tact and tried to do impossible things with it (such as 100 foot cables). So I know the Tact is slightly less robust at locking to 88.2kHz than to 96kHz with long cables, possibly for reasons having nothing to do with the chips used.

TI DIR1703 was an early, buggy version of the DIR9001, from the mid-to-late 1990s.

[Update December 7, 2021]

Tascam DA-3000 Passes Bit Perfect Test with Clock Cable

I finally had time on December 5th and 6th to retest the Tascam DA-3000 using a clock cable, which, as I correctly surmised, it needed.

I could not simply repeat the previous tests with the clock cable added, as I had originally planned (which is why I bought a 15 foot clock cable).

What made the test impossible is that the Marantz PMD-580 does not have any clock inputs or outputs. The Marantz is built with the philosophy that an ASRC operating all the time is just dandy. It is not possible to turn it off. You never get the exact original bits, but the Marantz meets its noise and distortion specs using ASRC. For many purposes (except high end audio and audio investigations) that is Good Enough.

So I had to set up an even more elaborate test using the Lavry AD10, which is the important thing for me in actual use. What I wanted all along was to record the bits from the Lavry AD10, a very respected and nice sounding analog to digital converter, which is still being sold as a new product for about 50% more than the DA-3000 itself. I have always used the Lavry in the Living Room system to digitize vinyl records into my digital front end just for playback. It was the best analog to digital converter I could afford (and still is).

Since I already digitize vinyl simply for playback on my system, I would have thought an inexpensive device could simply capture those bits perfectly from SPDIF and record them to a Compact Flash card (or better yet, a USB memory stick). Unfortunately such an inexpensive device does not seem to exist. Inexpensive recorders actually tend to have no digital inputs or outputs at all, and definitely not AES balanced digital inputs and outputs. Earlier generations of the Tascam had no way to turn off the SRC, just like the Marantz PMD-580. The only commercial bit-perfect recorder that I know of other than the DA-3000 is the Masterlink ML-9600, which hasn't been made in almost 2 decades and is cumbersome to use, requiring the burning of a CDROM for every data transfer. It's also too noisy to use in the Living Room, I decided years ago (though I now understand a tricky DIY SSD upgrade is possible, and that is said to eliminate the noise which I previously believed was caused by a non-removable fan).

I did use the Masterlink for all my earlier vinyl transcriptions, before I had vinyl playback in the Living Room. In the bedroom, I arranged the Masterlink so its vent holes (I figured there was a fan there, but it might be just the hard drive) pointed away from the turntable, or were at a different elevation, so it was never much of a problem. In the Living Room, the noisy vent holes of the Masterlink would be inches away from the turntable, not a good situation. That was what led me to acquire the Marantz PMD-580. It was only after I acquired it on eBay that I discovered you could not turn the ASRC off, which is contrary to my goals.

I had figured I'd repeat the PMD-580 to DA-3000 test, but just with a clock cable. That would be holding everything else constant, except for the clock cable. And then I'd test copying bits from the Lavry, the real goal, after that because it's a more complicated test.

But I had to skip straight to the more complicated, but also more important (like actual usage) test.

To do that, I connected the DA-3000 clock output to the Lavry clock input, with "Word Clock" selected on the front panel of the Lavry. When it is receiving an external clock signal it can handle, the corresponding sample rate light lights up. It is pointless to preselect the sample rate when you are using an external clock.

The AD10 has a Clock Input which accepts either Word or AES clock. The DA-3000 has word clock inputs and outputs. I do not need to change the clock setting of the DA-3000, since the word clock output is always active at the sample rate currently selected.

The AES digital output of the Lavry already goes through a Henry Engineering 4 way AES splitter (which operates like a little line amplifier buffer with zero delay) so that I can play on my system while recording, previously on the Marantz. But now, to record on the Tascam, I ran AES cables from the splitter to both the DA-3000 and the Masterlink ML-9600.

So, whatever I record, I record it identically to both recording devices. If the recordings turn out to be identical, then they must both be bit perfect. I already proved the Masterlink is bit perfect, but even if I didn't know that, identical recordings on Masterlink and DA-3000 would prove they were both bit perfect, since they could not match if they weren't.

The pink noise track from the Stereophile Test CD 2 was playing over and over on the Oppo BDP-205, and the balanced analog outputs of the Oppo were feeding the Lavry analog inputs, with the Emotiva XSP-1 in between doing level setting and buffering. So I'm not recording the actual bits on the CD; I'm recording the bits produced by the Lavry converting the analog signal that originated at the disc player. Every recording will be different from the previous one, because the recording never starts at the exact same instant of the signal, but if both recorders are bit perfect, the corresponding recordings made on the two machines in the same session should match.

I recorded this track 7 times on both the DA-3000 and the Masterlink. I primed both machines by pressing their record buttons. (On the Masterlink, I initially had to select playlist 1, then playlist edit, and every time I also had to press new track before pressing record. As I said, the Masterlink is cumbersome to use.) When there was a gap in the playback of noise, I pressed Play on both machines (which is what you do to start recording, because the record button only engages "Record Pause"). Then when the track ended, I pressed Stop. And so on, seven times. I did it more than once in case I messed something up, and it's easy to keep going once you get rolling.

As it happened, when I made the transfer CD24 disc on the Masterlink (containing all the 24/96 digital recordings) there had been one song from the previous tests still in the playlist. I thought to myself "no problem, I'll just remember that when comparing."

As it turned out, by the time I was actually working with all the files on my Mac, I had forgotten about that difference in track numbering. As a result I spent 30 minutes trying to line up two files that would never match (because they were different recordings of an analog source), since I could not find matching pairs of numbers. I got very frustrated and angry. But then I remembered I had to compare a higher-numbered song from the Masterlink with the one from the Compact Flash card, which came from the DA-3000.

Once I got the two digital recordings lined up in time and removed the time counters from the files, using the method I've described before, they matched perfectly: 40 seconds of digital data at 24/96, about 3,840,000 sample frames (each a pair of 24 bit samples for the two channels), without a single difference.

The terminal on my computer screen looked like this:

(The two pound signs shown after the diff command were my initial faulty attempts to do a screenshot.)

Having passed the test, on the evening of December 6 I removed the Masterlink for storage and "permanently" set up the Tascam DA-3000 as my living room recorder.
